AI Shills’ Talking Points vs Legal Reality

Folks are online arguing they're "AI artists" and "AI writers" - while using work stolen from creators. Let's look at their excuses.

The main headings below are the six specious arguments I see most often from apologists for the AI industry. Since this is a long article, each argument has its own section so you can jump directly to it.

I want to call these out. But I’m not just shaking my fist at the clouds: I’m arguing for strong regulation, which AI companies are fighting. See this post highlighting the need for AI guardrails for more on that.

AI Training is like what People Do

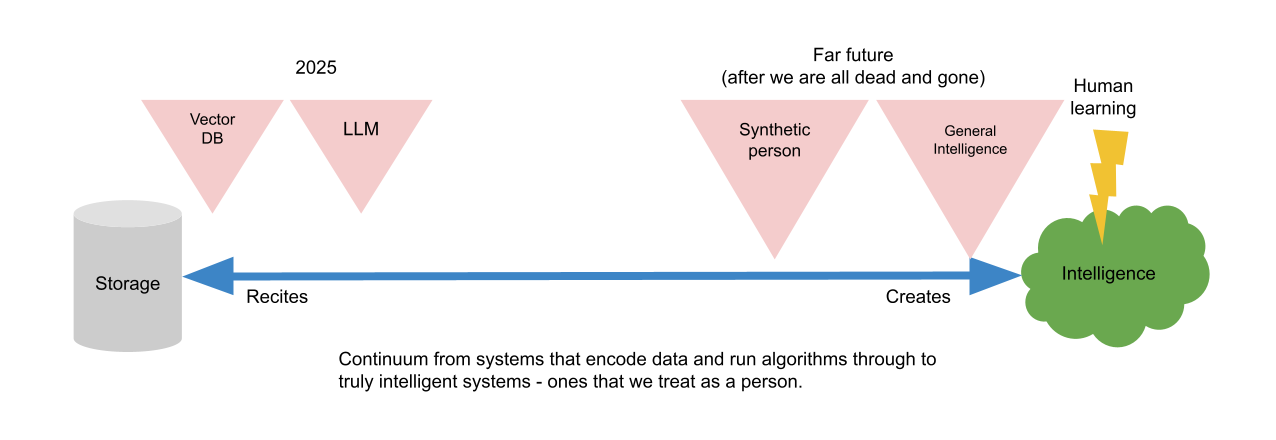

My opinion: “training”, as used for the hill-climbing-style incremental setting of a neural network’s weight values, is a term of art created by computer scientists.

In other words, “training” an AI is a metaphor, not a reality. It’s meant to evoke an idea; it’s not literally “training” as we humans understand it. All the detail about hill climbing and error terms is complex, so we use the shorthand word “training”, although the AI process is nothing like what humans actually do.

AI model weights being adjusted over time during model building is a form of lossy encoding. It’s akin to how we store frames of video into an MPEG container file, and tolerate some small errors as long as human perception cannot see them.
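To make the shorthand concrete, here is a toy sketch of the kind of incremental, error-driven weight adjustment the word “training” refers to. It is an illustration under simplifying assumptions, not how any production model is built: the function names `fit_line` and `mean_squared_error`, the five data points, the learning rate and the step count are all made up for this example.

```python
def mean_squared_error(points, w, b):
    """Average squared gap between the line w*x + b and the data."""
    return sum((w * x + b - y) ** 2 for x, y in points) / len(points)


def fit_line(points, steps=5000, lr=0.01):
    """Nudge two weights (w, b) a little at a time, always in the
    direction that reduces the error term."""
    w, b = 0.0, 0.0
    n = len(points)
    for _ in range(steps):
        # Gradient of the mean squared error with respect to each weight.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in points) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in points) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


# Hypothetical data: five (x, y) pairs lying roughly on a line.
points = [(0.0, 1.0), (1.0, 3.1), (2.0, 4.9), (3.0, 7.2), (4.0, 9.0)]

w, b = fit_line(points)
error_before = mean_squared_error(points, 0.0, 0.0)
error_after = mean_squared_error(points, w, b)
print(w, b, error_before, error_after)
```

The two weights end up as a compact, approximate summary of the five points: the error shrinks a great deal but does not reach zero, which is the sense of “lossy” used above.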

It’s an important concept and I wrote about it in this Substack article.

AAP Lawyers say AI is Encoding

In a recent court case against Meta, the company behind the social media platform Facebook, the Association of American Publishers filed an amicus curiae brief in court in California on 11 April 2025, which says in part:

II. Meta’s Mass Appropriation and Exploitation of Expressive Works to Train Llama Was Not a Fair Use

A. LLM Training Is Not a Transformative Use of Copyrighted Works

1. Training Is Not “Learning” About Works, But the Encoding of Expressive Content

Meta’s claim of fair use is largely predicated on two false narratives.

Seeking to establish that its exploitation is “transformative” under the first fair use factor of section 107 of the Copyright Act, Meta misleadingly portrays the LLM training process—in which works are systematically reproduced and ingested into Llama word by word—as merely recording “statistical information” about the works rather than capturing the content of the works. The LLM algorithmically maps and stores authors’ original expression so it can be used to generate output—indeed, that is the very point of the training exercise.

and

Contrary to Meta’s claims, there is nothing transformative about the systematic copying and encoding of textual works, word by word, into an LLM.

Word by Word

AI models like GPT 4.5 or LLAMA 4 are like a storage facility in that they contain data. That data bounds what they can produce. AI doesn’t have the ability to create ex nihilo [1].

Right now AI image generators are interpolating between pieces of stored images, in a way analogous to the old face-morphing technologies.

Humans cannot remember every detail of a photograph or painting, or complete tracts of text from thousands of books. AI can do that. That stored information is where AI gets its outputs from.

We know instinctively that it’s wrong, but there are people still defending AI by making this “training” claim. LLMs are in fact at the storage end of the spectrum, not the intelligent end. They contain a lot of information but no smarts.

The nail in the coffin for the “it’s training” argument is that you can literally get AI models to regurgitate tracts of text in response to crafted prompts. AI companies view this as an exploit and try to stop it, but researchers have repeatedly shown that the models do memorise and regurgitate the text of creative works.

This shows that the amicus curiae argument above regarding copying is correct.

People Cannot Tell the Difference

This is a stupid argument for virtually anything you might apply it to:

Counterfeit $100 notes

Fake Rembrandt pictures

As creatives we sell our work based on our authenticity. Fake AI slop that pretends to be real can fool some folks, and that is not the fault of people who in good faith believe it to be real.

Humans who are not creatives are not expected to know the real thing after a cursory inspection, and they should not have to. The “no-one can tell” argument is a completely dishonest and corrupt idea.

Anyone saying “No-one can tell” doesn’t understand the concept of provenance. You can make a fake Rolex, and it doesn’t matter how good it is at telling the time, it’s not a real Rolex.

If I see an image created with paint and a paintbrush I can read the artist’s statement, maybe at a gallery, and marvel at how the artist made my eye believe there was a field of flowers with a few spots of paint.

That is worth more than any AI fake image of a field of flowers. The point is not the pixels. It’s the provenance.

What is the Harm

The model is already trained, right? The art is already stolen. What is the harm in someone having some fun making a doll picture of themselves?

Using AI to generate images:

boils the planet

enriches oligarchs

traps developing world workers in exploitation

creates a cycle of continual destruction of IP

This YouTube documentary, also referenced elsewhere in this article, features Zhang Jingna’s photography and shows how she was hounded by AI incels for pushing back when her work was stolen to be used in countless “Asian girl in the style of Jingna” prompts, directly stealing her intellectual property.

With books the same problem occurs: pirates take books, and unethical AI companies then cynically use the pirated copies to create new AI models. AI companies are only able to do this because people keep using what the companies supply.

If you don’t use fraudulently obtained data from unethical AI companies then their count of monthly active users (MAU) goes down, and that is everything to a startup.

When I challenge people online about AI usage sometimes I get a nice result where they genuinely did not realise the problems with what they were saying and doing. Sometimes we agree to disagree. But there are also some who are flat-out really horrible people.

If you’re a defender of AI, look around at who you’re on the same side as: the Mikkelsen twins, for example, and scammers who know they’re ripping people off and revel in that.

You cannot stop it

A non-argument. It’s basically “stop arguing, you can’t change anything”, so it doesn’t address any factual argument at all.

We can also dismiss this one by simply examining the form of this “argument”.

Tidal waves, gonorrhoea, domestic violence… you can’t stop it.

No, but if it’s bad, and you can do something to lessen the impact of that bad thing then ethically it behoves you to do that.

My position (see the top of this article) is that AI must have regulation. This is something the AI companies are fighting by trying to cast themselves as innovative geniuses pushing technology forward, and anyone in their path as Luddites who don’t understand the technology.

In fact under this completely dishonest and fatuous argument I’d also place:

You don’t understand AI

You can’t stop progress

You just need to learn AI

You’ll be replaced by someone else using AI

…and all the rest.

Go here to see my credentials in particular. I am no expert on AI but I have found in general I have a better understanding of it than some of the folks I encounter.

AI is not a new technology. The transformer architecture and the attention algorithm are innovative, but the real “innovation” was large-scale theft of data, creating models with billions and billions of parameters.

This “you can’t stop it” thing is just a way to insult and attack a person, without actually addressing what they’re saying.

Edgelords are on social media dissing artists, saying they should just quit and get a job at McDonalds. In a video by Mohammed Agbadi about “AI artists fixing real artists work” he shows this guy in the screenshot above telling artists to quit and be a plumber. Mohammed’s whole video is instructive if you want to know what some non-artists think of art. Basically, to some, AI is a big “f*$k you for having skills” weapon against artists.

It’s just a tool

Often the people online saying this contradict themselves in the next post or even next sentence.

The long-form (probably AI-generated) “School Debate Club” style essay entitled “The Final Argument: AI-Generated Images Are Art” says it will oppose the claim that AI can’t make art because it lacks consciousness.

The author says:

AI is not devoid of human input.

…and then — without a trace of irony — he goes from that to:

The AI is a tool, like a paintbrush, a camera, or a chisel.

So which is it? A) human/artistic/conscious/whatever or B) a tool like a chisel?

To be honest that whole word salad is free of any actual meaning.

Or are they trying to say it’s the human input that makes the difference? If that’s true then the proposition “AI-generated images are art”, which the whole essay is trying to prove, is out the door, because it’s human input, not AI, that causes the art.

Obviously Not Just a Tool

Honestly, trying to discern any actual train of thought or cogent argument from these “AI is a tool” people is hopeless. And we should not bother, because AI is not just a tool: it’s got to be one of the least tool-like things on the planet.

Generative AI LLMs like ChatGPT 4.5 are giant models that have billions of parameters and require whole data centres to run. Huge teams of engineers work on deploying, updating and managing them, and they are costing trillions of dollars in investment. And they rely on massive troves of stolen data: millions of books, for example.

AI data centres cost billions of dollars to run. Each time you run a query the real cost is subsidised by investor money, and it requires a gallon of cooling water for a postage stamp of imagery.

You can connect to it over the internet from your phone or laptop, but ChatGPT, LLAMA or Claude: these things are monsters. They can do the homework of thousands of kids while summarising a thousand articles, all while spewing out dozens of derivative works from stolen copyrighted books or pictures, all in the same minute.

It’s like saying an aircraft carrier is a tool.

It's about as opposite from being "just a tool" as you can get.

AI is Not Like Photography

Shills say: When photography was first invented, it was seen as not an art form. Here’s an example of it in the wild:

The long-form (probably AI-generated) “School Debate Club” style essay entitled “The Final Argument: AI-Generated Images Are Art” essentially argues the same thing. The form of the argument is:

1. People say AI images are not art.

2. But AI is like photography.

3. Photography was rejected as art.

4. AI will therefore be accepted as art.

Here are the problems with it:

Begging the Question

Today everyone in their right mind accepts that photography is an art form. Mapplethorpe’s portraits are in the Tate gallery so it’s common knowledge that it’s possible to create art with a camera.

So by saying in step 2 above that AI is like photography, they are trying to smuggle the conclusion of the argument into the premises!

Further, the people who argue this offer no proof that AI is like photography.

They also don’t prove item 3, that photography was rejected by the art community, beyond quoting Baudelaire or a few others. There’s never any demonstration that widespread grassroots art communities, galleries and society in general rejected photography.

Analogical Argument

Analogies in discourse are illustrative, in general, but you still have to prove all the points in the argument. At best an analogy can suggest an outcome and provide some support, but it’s inconclusive, especially when you never prove the likeness in the first place.

Art Definitions are a Red Herring

But all of this is subterfuge designed to get us drawn into an argument about what is art and what is not, all to divert attention from the argument’s most glaring problem.

Say for a moment that the output from AI is art. That does not entail that the person who initiated it is an artist. Proving the first still leaves the second unproven.

The “AI images are art” guy says, in his big long list of definitions of art:

AI-generated images, presented as art, fulfil this criterion by their very exhibition.

No quarrel with that. I think artists could use AI if they want. They can use a banana so why not AI?

People have playfully presented AI images in galleries and submitted them to art competitions:

Berlin based photographer Boris Eldagsen created an image using AI

It won a photography competition, but Eldagsen did not accept the prize

He’s shown in a YouTube documentary describing himself as a Promptographer - he gets some interesting images from AI

Sydney photographer Jamie Sissons heads an Art Studio which created an image

Under a fake name the image won a local competition

Jason Allen entered AI pieces into a Colorado art fair in 2022 under the “digital arts/digitally-manipulated photography” section and won a $300 prize

The first two are both cases where someone self-described as a photographer got an image from AI and won a photography competition with it. These people were artists before they took an AI image to a gallery or art competition.

In these cases the AI involved was not the thing that made the images into art. Boris Eldagsen, an artist, used AI to make something, then presented it as art, giving a story as to why he made it: his artist statement. So something else (the artist and the provenance) is determining the images’ “art-ness”, not the AI.

Allen Stepped into an Artist’s Shoes

Colorado State Fair’s fine art competition allows any “artistic practice that uses digital technology as part of the creative or presentation process”. Allen used Photoshop to add a head to one figure whose head was missing.

When another user asks if he explained what the software does, Allen replies, “Should I have explained what Midjourney was? If so, why?” eliciting face-palm emoji reactions from others in the chat.

AI made something which was presented by Allen as art, but the judges were not aware it was AI (not Allen) who made it. This was a case of something looking like art, being presented as art, and judged as art. But Allen obscured the provenance of the piece. He made some small alteration to a piece that came from AI (from other people’s work).

In my opinion Allen was a scammer. AI is not “just a tool”. It is data centres full of other people’s work. He took that work, diffused it into an image and presented it as his own - he should have revealed the true provenance so it could have been judged correctly.

Logical Fallacies and AI Art proponents

If AI output were an art form, that would not prove that the person pursuing it had talent.

You’d want to see them produce art with that art form first. Picking up your cousin’s Stradivarius violin every day for a month while they’re away on holiday doesn’t make you a concert violinist, even if you get some kind of noise out of it.

This whole idea of “what is the definition of art” and “who gets to decide” — it’s all misdirection. It’s logical trickery. Logical fallacies.

Here the claim is that if an AI produced an artwork, that necessarily entails that the person who initiated the AI production is an artist.

Let’s have a quick look at another logical fallacy: the false syllogism, a form of invalid argument. For example, “all cats have four legs” does not mean that anything with four legs is a cat.

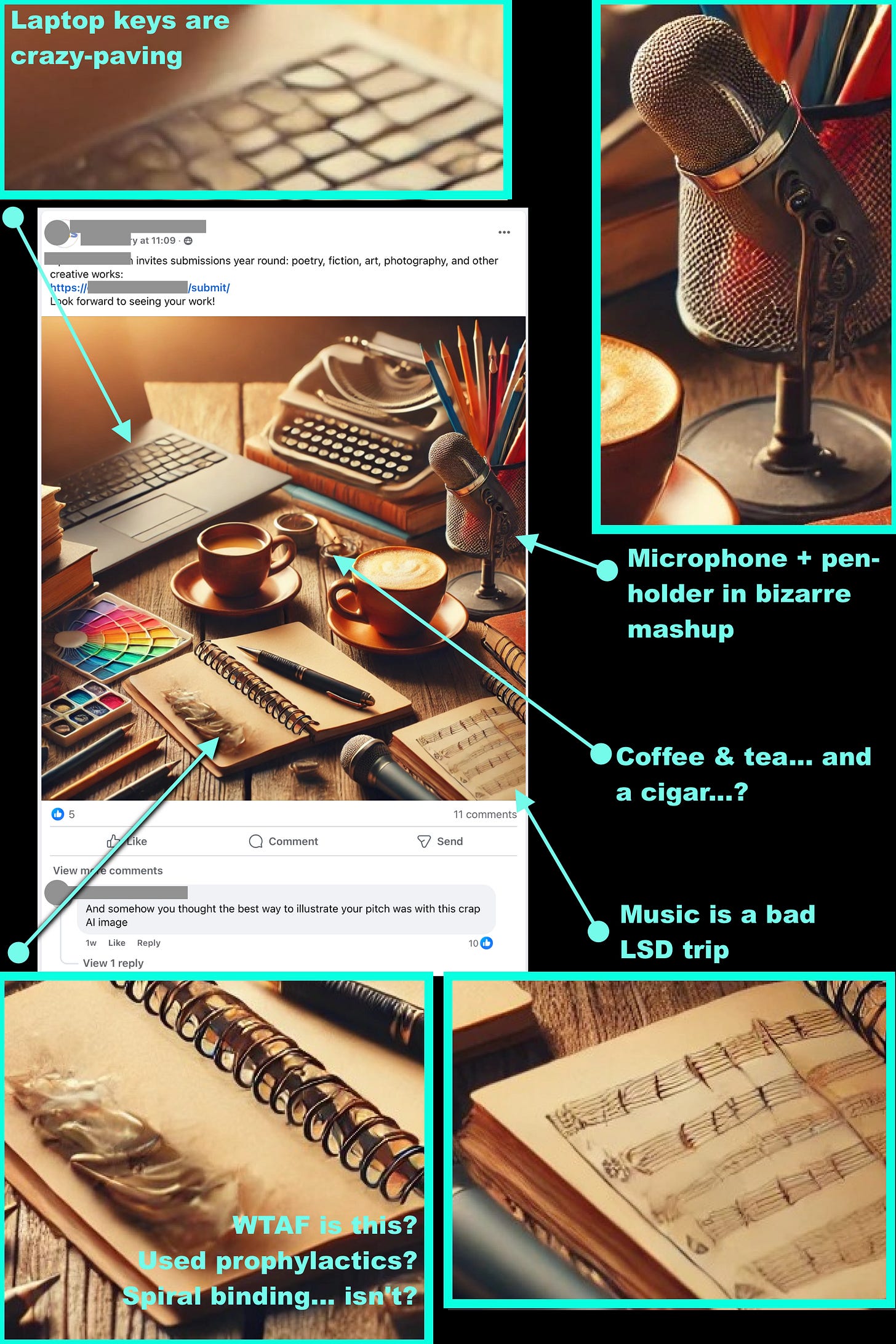
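The cat example can be checked mechanically. The sketch below is a toy illustration, with a made-up list called `things` and a hypothetical helper `implies`. It shows that a premise of the form “all A are B” can hold while its converse “all B are A” fails. Logic texts usually call this mistake illicit conversion, or affirming the consequent.

```python
# Hypothetical toy data: each entry is (name, is_cat, number_of_legs).
things = [
    ("cat", True, 4),
    ("dog", False, 4),
    ("table", False, 4),
    ("sparrow", False, 2),
]


def implies(p, q):
    """Material implication: 'if p then q' fails only when p is true and q is false."""
    return (not p) or q


# Premise: everything that is a cat has four legs.
all_cats_have_four_legs = all(
    implies(is_cat, legs == 4) for _, is_cat, legs in things
)

# Converse (the fallacious step): everything with four legs is a cat.
all_four_legged_are_cats = all(
    implies(legs == 4, is_cat) for _, is_cat, legs in things
)

# Anything four-legged that is not a cat refutes the converse.
counterexamples = [
    name for name, is_cat, legs in things if legs == 4 and not is_cat
]

print(all_cats_have_four_legs)
print(all_four_legged_are_cats)
print(counterexamples)
```

The same shape applies to the AI art claim: showing that some art was made with AI says nothing about whether everything made with AI is art.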

To make this clearer, we know that sometimes people make AI images for other reasons than art. So it’s not true that all AI images are automatically art. Here’s an analysis I did of an AI slop image made for the purpose of selling someone’s services on Facebook. The AI slop image was for sales so not intended to be taken to a gallery or entered in an art competition.

So: proving some output from AI was art doesn’t get you anywhere because there are plenty of examples of outputs from AI that their authors freely admit are not art.

False statement: AI images are art (some are not)

Check my other article under the heading “AI fakes are used to Rob People” for more examples of AI images that are not art.

Stepping into an Artist’s Shoes

Say on some occasion - like Allen winning the art competition above - the output from AI is art. That does not entail a person who initiated it (Allen) is an artist.

Here is our excluded middle, no matter what you define art as. Let’s imagine we agree on some definition of what art is. That definition — call it d — is known and we have a person A (Albrecht Durer say, an acknowledged famous artist) who is about to produce a piece of output that complies in every meaningful way with art definition d.

We don’t have to know what d is, just that A knows it intimately and has a system set up to produce an output [2]. A does all the work, readies the system, then leaves the room for a minute, and a bystander B (Durer’s house cleaner) walks in and presses the button, and takes the output (the printed engraving above). B does not know d (art). They could not build the system, but they can see enough to crank the handle.

A person does not become the artist by virtue of extracting the output

Here B did not become the artist by virtue of extracting the output [3]. They were just taking the output of another’s work. It’s a Searle’s Chinese Room setup where B is in the Chinese Room and is not the artist.

Photography something, something… AI image makers are Artists?

No, it doesn’t follow. What is conclusive is that people saying “AI images are art” are failing to meet their burden of proof.

What is meant by AI images are art? Does it mean:

“AI images win art competitions so AI images are all art” or

“I’m an artist if I cause any AI image”

“I made AI images and I say they are art so I am an artist now”

Regardless, “Art Definitions are a Red Herring” and “Stepping into an Artist’s Shoes” show that even if the output from AI is art, that does not entail that the person who initiated it is an artist. Your definition of art doesn’t matter.

This is because there is an excluded middle, another explanation. The true provenance, the origin of the image, is “from the AI’s data”. Pieces of art were in the AI and they are smooshed together by the morphing algorithm.

Vibe Coding for Art

The AI does not have a conscious idea of what code it is writing; it’s following its context and prompts.

A photographic artist (like those two above) might work with the AI system to produce a consciously intended image. It’s possible.

But I think what happens in some cases is that people use few prompts and vibe around a lot, then convince themselves that what came out was their imagination.

I don’t care how many prompts you used, it still came out of the generative AI system. From other people’s work. Every pixel in that image was from a data centre somewhere.

What is People’s Motivation When Creating AI Images?

Eldagsen says he and his mother saw old pictures including ones with his father, and wanted to capture that feeling. He says that sometimes “the AI comes up with good ideas” — those are in fact from the data set the AI model was built with.

But I think AI poisons any artist’s creative vision. I think the data set can lead folks to any place, but only to places it’s been fed from other people’s images. And as well as artistic images there are bad images.

I think people using AI to create images are very poor judges of the merits of the image. I think they feel free to indulge their worst impulses at times.

Witness this:

We know that AI was fed pornography from the work in Madhumita Murgia’s book Code Dependent, where she talks about the developing world workers and others who are subjected to horrible images when labelling training data. We know that AI systems were trained on images of children, and on child abuse imagery. AI companies claim this has stopped, but they’ve also gotten rid of safety protocols and the staff responsible for stopping it.

We know people are using AI to create pornography, including of underage targets, for example in the GenNomis database:

The publicly exposed database was not password-protected or encrypted. It contained 93,485 images and .json files with a total size of 47.8 GB. The name of the database and its internal files indicated they belonged to South Korean AI company GenNomis by AI-NOMIS. In a limited sample of the exposed records, I saw numerous pornographic images, including what appeared to be disturbing AI-generated portrayals of very young people.

Conclusion

My position on AI images and art is that AI doesn’t make you an artist. I think it’s possible for a few AI-using folks to make art with it. For any given person using AI and claiming to be an artist, I am ready to be convinced given evidence. I would want to see provenance and an artist statement.

| Argument | Verdict |
| --- | --- |
| AI training is the same as people learning | FALSE |
| No-one can tell the difference | FALSE |
| What’s the harm | LOTS |
| You cannot stop it | YOU CAN REGULATE |
| It’s just a tool | IT’S DATA CENTRES FULL OF STOLEN IMAGES, NOT A TOOL |
| AI is like photography | PROVE IT |

What I would like is for readers to support regulation of AI.

Thanks for reading.

[1] Some AI can use Retrieval Augmented Generation or RAG to fetch information outside of the encoded model, feed it through the inference and get it out as part of the generation. This is how it deals with current events using news sources for example. I don’t think AI image generation is using this directly to create images, but it might use it to make sense of a prompt. I don’t know for sure.

[2] A row of dominoes, ropes and pulleys, a player piano where they have punched holes in the paper tape themselves; whatever, it’s some turnkey system.

[3] We agreed on d, the definition of art. It doesn’t matter what it is, so we know it would have been art had A done the final act required to realise the requirements of d.