Six Utterly Compelling Reasons to Stop using AI Right Now

Stealing peoples work, boiling the planet and enriching oligarchs is only the start. Generative AI is a net negative you don't need. Ditch it and start feeling better.

There’s AI that relies on other peoples work unjustly in an attempt to steal from workers and then commoditise the stolen proceeds. I'm not talking about anti-capitalism, I'm talking about flat-out wage theft. Don't tell me about how "useful" it is. Before abolition plenty of folks found not paying for labour useful.

1. Wage Theft

Wait?! Are you a Commie? Wage theft? That’s a reason?

Laws mandate that employers have to pay employees. In Australia the law is $24/hr. If you don’t pay workers that is non payment of wages and it’s a crime, here in Australia.

But regular (non-AI) employers trying to get around their legal obligations is a billion dollar heist that is going on right now. So — in general — companies stealing from employees is unlawful but profitable.

Fascist countries or countries where the populace is enslaved, trafficked and live in grinding hellish poverty don’t have laws like this. I don’t want to live in a place like that. How about you? No? So let’s have some basic laws about my body, my labour; and laws must come before profits. That’s not communism, that’s human rights.

So if that’s the way it is for others, why not AI companies? Somehow for them, wage theft has been turned into a trillion dollar industry.

But when it comes to AI, wage theft has been turned into a trillion dollar industry.

Large tech companies like OpenAI are using dirty tactics to push out their flouting of labour laws to "Legally distinct entities", and those are exposing workers - including children - to horrific conditions.

Didn't know about this? Sort of knew but haven't had time to catch up with it? There's YouTube videos of a German Documentary.

Is it super cool that AI can make a picture for you? How many exploited workers had to look at images, some horrific, so that OpenAI could file off the serial numbers from other peoples art work and serve it up to you?

AI labelling companies - some created by tech execs who exited the Silicon Valley AI companies they now on-sell to - are stealing from employees & trying to dodge the law to do so. It’s wrong when other companies do it, it’s wrong when AI companies do it.

2. AI Enslavement

In Madhumita Murgia’s book Code Dependent: Living in the Shadow of AI the author says:

For OpenAI the creator of ChatGPT, Sama’s workers were hired to categorize and label tens of thousands of toxic and graphic text snippets - including descriptions of child sexual abuse, murder, suicide and incest. Their work helped ChatGPT to recognise, block and filter questions of this nature.

This is truly awful; first that workers like Ian and Benja who Madhumita Murgia report on in her book, have to be subjected to that. But second that this is the use that these systems are being put to. Generating child porn, revenge porn, hate imagery and so on is a massive part of the traffic of these systems.

They’re supposed to elevate humanity to new levels, and instead they’re gratuitously supporting the worst impulses of the dregs of us. And its continuing despite AI companies supposed filtering prompts via “patches” to stop it.

But aren’t companies like Appen and Sama providing employment for folks in developing nations? Lifting them out of poverty?

No. Here’s Murgia:

The arrangements of a company like Sama — low wages, secrecy, extraction of labour from vulnerable communities — is veered toward inequality.

What it would look like if they were actually providing employment - 1st world nations would have these jobs, under the rigorous labour laws that reflect actual human rights that we have when our lives are not under threat from warlords and despots. And then, only when the jobs were in existence here - in places like Australia and the USA - would the same jobs be made available to workers in Kenya.

It’s fine to adjust the pay rates to reflect lower costs of living — I get that here in Brisbane, Australia compared to New York or even Sydney. But it’s not OK to pay slave labour rates, and adopt extreme gig-worker conditions where the work is there today and gone tomorrow.

Sama AI was originally a not for profit, but once it got up and running like OpenAI itself, it’s so easy to just turn the screws. Now these companies operate under incredibly strict NDA’s that make it harder and harder for journalists to expose trafficking and exploitation of workers. Patch the outputs instead of fixing the problem. Are you sitting there thinking “So it’s Kenyan folks being exploited… and I get free stuff?”

Firstly, I really hope my readers have an ethical bone in their body. Second, AI systems like ChatGPT are a mechanism to transfer wealth into the hands of a tech oligarchy. That mechanism can and will turn to extracting labour from you & me. Don’t believe you will always be the beneficiary, those screws can turn on you as well.

And the arms-length companies being setup to do this are full of the same people who worked in the tech companies to begin with.

3. Don’t “Not all AI” me bro

Apologists, PR wonks and crypto bro types trying to pump their NVIDIA stocks are all up in my mentions complaining in a dozen different ways that I’m wrong, I don’t know what I’m talking about and AI is everywhere.

While most people in my socials agree that AI outputs are awful, and they are sick and tired of the dreck that AI outputs clogging every forum we use, there’s usually a few weirdos who pop up with some schoolyard debate tactics they want to try out.

Don't bother - I have 20 years in tech, founded a startup that used AI, I am ex-Google, ex-Canva; years of consulting and working with AI and tech. Have a look at my profile.

I know what I’m talking about.

The AI is everywhere stuff is BS. A number one bad faith argument technique that AI defenders try is to make a false equivalence around the systems that are for example built into our cellphones; and the AI run in the cloud by OpenAI — you know, the one that uses child labour to sift through pornographic content.

Its a false equivalence because, a system — for example — like the Apple Image API that can tell you if a photo on your phone has faces in it does not require transmitting that image data outside of the phone, and relies on very simple machine learning models.

I suspect Apple’s model is based on Haar Cascades which is a technology available in OpenCV and was first published in 2001. Its not “AI” - I would term it Computer Vision, rather and AI.

It’s a classifier. It’s not generative. The primary tech is this simple feature extractor. No exploited Kenyans suffered to build this thing.

These jerks are trying to blur the lines to say my iPhone camera is the same as a billion parameter model running in the cloud, with petabytes of people’s work stolen off the internet. True, Apple is now trying to put OpenAI on my iPhone. I have turned all that off, and so should you. You should not install the OpenAI app either.

But it’s just wrong to say there’s some huge unwashed sea of AI technology and its all the same, and you cannot discern differences to criticise one part of it. That is just absolute garbage talking points of AI defenders. We know instinctively that an app that is “creating” passages of text or images is getting that from somewhere. It doesn’t just come out of nowhere. So don’t allow bad faith actors to argue this BS line of reasoning.

When I talk about AI creating content from labelled data, that's generative AI, using large language models. So don't "not all AI" me. Don't even start with "AI is on your phone". I built AI systems for phones, and am a patent inventor. The classifiers, assistive tech, search systems and gizmos running locally a phone are not gen AI.

4. Billionaires are Boiling our Planet and Enslaving Us - Just Say “No”

So if you are OK with stolen wages, and still want to use AI to steal other peoples work, consider this: Elon Musk's xAI is a huge proportion of his wealth, and with the government contracts he is eyeing (taking) it will be more than the $75bn it is now.

The main products to use OpenAI, like Dall-E, CoPilot, and ChatGPT - and a million shitty little startups that are a brain dead wrapper around OpenAI's APIs - all could plug in xAI instead. If Musk gets his way there's a good chance that will happen.

Prediction:

1 years time, Musk wins his suits against Sam Altman and OpenAI, and the company's shares drop.

Musk acquires OpenAI after he orders the SEC to drop any investigation into the merger.

Prices for usage of the API's quadruple and Musk blames electricity prices:

ChatGPT Free > $30/month

ChatGPT Plus > $80/month

ChatGPT Pro > $800/month

Divest. Divest. Divest. You are better than this.

You don't need it, and you never did.

5. AI’s business model is Theft, and its outputs are plagiarism

Lastly I want to talk about theft of other peoples work. AI is built on stealing other peoples work. That's its business model. Your cool "generated" artwork or whatever is not coming out of some clever artificial brain. Its other peoples work.

With the serial numbers ground off.

How do I know? Can I prove it?

Gen AI in the form of LLM's especially the very large ones, require petabytes of other peoples work to be fed into it. That’s accepted. Even by Sam Altman.

AI researchers coined the term "training" for this process.

It's not training, it's storage. Encoding would be a better term.

A "trained" AI is a collection of weights, numerical values. The original data is in there, but the companies don't want you to know that.

It’s a Lot Like Video - Its Encoded, not “Trained”

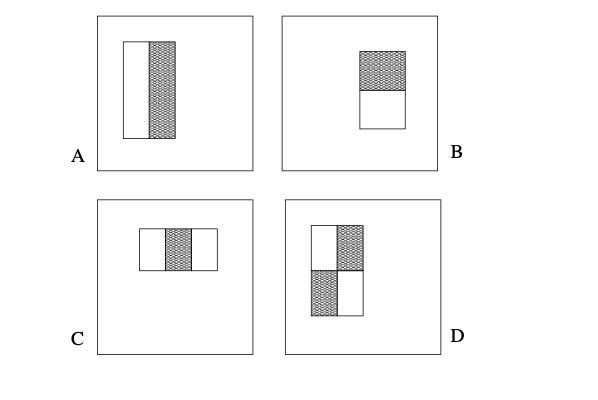

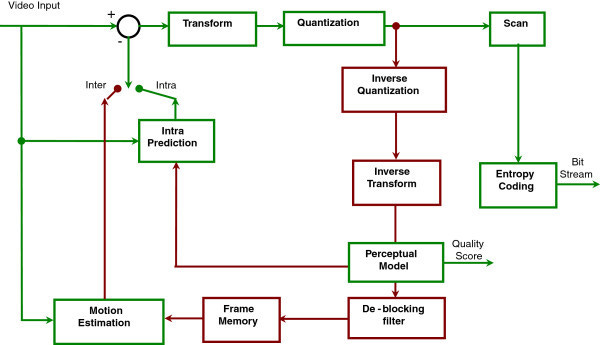

A video codec works by computing the next frame of decoded video from the previous one, based on data stored in numerical (digitised) form. The H264 format, the most common one, used in eg MPEG files, uses motion elimination and inter-frame prediction to reduce the amount of data stored. This is a “lossy” storage model, so the picture is not produced perfectly but it’s tailored to our human perceptual quirks so we don’t notice it and the picture looks like what we expect.

Image credit: Karthikeyan R, Sainarayanan G, Deepa SN - By CC-BY-SA 4.0

But you don’t care - its “just video”. But guess what, you’d never say, despite the complexity of this encoding process, that this was “intelligent” even though its modelling aspects of our perceptual system. So, just for a minute - imagine how far you would get if you tried to rip-off the latest Disney movie from the cutting room and sell the MPEG file before the box office opened, and say “Oh, its trained”.

AI Models Have the Original Training Data

It's considered a problem if a deployed AI model is able to regurgitate the original training data. It’s called “memorisation”.

But security researchers have done just that:

This happened at the end of 2023, and the response was not “Oh, we’ll stop storing other peoples work in our AI”.

The response was not even, “We’ll fix our AI so that it is proof against people recovering the original data from it”

When AI companies try to fix this, they can't - because storing other peoples work in an AI is their business model. The best they can do is try to stop prompting to get the stored data out. That's why even after the "exploit" was reported, and “patched” the problem still persisted.

So poetic that AI companies are fighting to stop exploitation of AI systems, to hide the fact that they are exploiting actual people.

So poetic that AI companies are fighting to stop exploitation of AI systems, to hide the fact that they are exploiting actual people. They don't care about making people more productive: they care about stealing other peoples work, and profiting from it.

6. AI is Hideously Insecure and Cannot be Fixed

In computing after we invented database systems, we discovered that they were vulnerable to hacking, by a type of attack called “SQL Injection”. This basically can be translated thus:

When we made databases years ago we thought it would be good to have a simple human readable input system where you could type anything and get data back. But we forgot that bad people could also trick us into sending input, and then really bad stuff would happen.

We cannot fix the root cause of SQL injection attacks. All we do now is try to stop the special characters that would allow it from getting into our systems.

AI has the same problem. It also takes human readable input.

That would be bad if there was already exploits out there that use this. Oh - there is.

The spAIware exploit mentioned can execute arbitrary code on your computer, because AI doesn't treat data differently from instructions.

That's it's model - get your prompt, get the context, including any data from the web. Or malware masquerading as "data".

And they can't fix it.

In my opinion the "memory" feature, and especially AI agents, are insecure by design.

Anyone using one of these systems is basically opening themselves up to their entire system being remotely pwned.

AI systems crave context

A big problem for AI systems builders is that without all the context to respond to the prompt AI’s can come off looking pretty stupid. If you ask an AI a question it generally doesn’t know something you asked it 5 minutes ago, which would give a huge hint about what the correct answer would be.

To solve this problem, AI companies want to push persistent processes and storage into the client end of the users of their system. Chat interfaces can be good for this, because you can say several things over time. An AI agent is good for context, because its basically not much more than a prompt running in a loop, that performs various tasks, keeping context all the time - including access to all of your documents.

The idea of an AI “memory” was thus super appealing to AI companies, even though Software Engineers like me have a heart attack about the idea, because its basically like opening a vein to OpenAI and allowing whomever is on the other end to pump in there whatever they want.

They tried to fix AI Memory and Can’t

The idea of an AI “memory” was thus super appealing to AI companies, even though Software Engineers like me have a heart attack about the idea, because its basically like opening a vein to OpenAI and allowing whomever is on the other end to pump in there whatever they want.

In my opinion the "memory" feature, and especially AI agents, are insecure by design. Anyone using one of these systems is basically opening themselves up to their entire system being remotely pwned.

Summary

Don’t use Generative AI systems from companies that are being sued by anyone for any reason, and inform yourself about how the rights of people to their own work are being violated for a quick buck.

Thanks for reading!

Got anything you want to ask or share? Please subscribe and join the discussion.